Introduction: Machine Learning Algorithms

Machine learning algorithms power the tools we use daily—from personalized Netflix recommendations to fraud detection in banking. Yet for beginners, the world of machine learning algorithms can feel overwhelming. Which ones should you focus on first? How do they actually work in practice? And what real-world problems do they solve? This guide breaks down the most essential machine learning algorithms, explains their strengths and weaknesses, and shows you how they connect to scenarios you already know. Whether you’re a student, a developer just entering the field, or simply curious about AI, this article will help you build a solid foundation.

Why Start with Algorithms?

Think of algorithms as recipes. Each one gives instructions for turning raw data into useful predictions or insights. Some recipes are quick and simple, while others are more complex but deliver richer flavors.

By learning a handful of core machine learning algorithms, you’ll cover most beginner-to-intermediate use cases. Once you’re comfortable with these, you’ll have the confidence to explore advanced techniques like deep learning or reinforcement learning.

1. Linear Regression – Predicting Continuous Outcomes

Linear regression is often the first algorithm taught in machine learning courses, and for good reason: it’s simple, powerful, and widely used. If you want a step-by-step beginner’s tutorial, this guide on linear regression explains the concepts in depth.

How it works:

It draws a straight line (or plane, in higher dimensions) through your data to best predict a continuous outcome.

Example scenario:

Imagine you’re a landlord who wants to predict rental prices in your city. By feeding the algorithm data about square footage, location, and amenities, it can estimate the monthly rent for a new property.

Why it matters for beginners:

- Easy to visualize and understand.

- Helps grasp the idea of modeling relationships.

- Forms the basis for more advanced algorithms like logistic regression.

2. Logistic Regression – Predicting Categories

Despite the name, logistic regression is used for classification problems, not regression.

How it works:

It predicts probabilities and assigns data points into categories (e.g., yes/no, spam/not spam).

Example scenario:

A bank wants to determine whether a customer will default on a loan. Logistic regression can analyze past repayment data and classify new applicants as low or high risk.

Why it’s important:

- Builds on the intuition of linear regression.

- A strong baseline model for many classification tasks.

- Commonly used in medical, finance, and marketing applications.

3. Decision Trees – Splitting Data into Decisions

Decision trees are intuitive because they mimic human decision-making. A clear walkthrough is available in this GeeksforGeeks article on decision trees.

How it works:

The algorithm asks a series of “yes or no” questions to split data into branches until it reaches a prediction.

Example scenario:

An online store could use a decision tree to predict whether a customer will buy based on browsing history: “Did they add an item to the cart?” → “Did they view shipping options?”

Strengths:

- Easy to interpret and visualize.

- Handles both classification and regression.

Limitations:

- Can overfit data (predict perfectly on training data but fail on new data).

4. Random Forest – Combining Many Trees

Random forest is an ensemble method—it combines multiple decision trees to improve accuracy. You can read IBM’s explanation of random forest to see how it’s applied in enterprise solutions.

How it works:

It builds many decision trees, each trained on a random sample of data, and then averages their predictions.

Example scenario:

Think of a random forest as asking 100 doctors for a diagnosis instead of relying on just one. The majority vote reduces the chance of error.

Why it’s useful:

- More robust than a single tree.

- Works well with messy, real-world data.

- Often outperforms simpler models with little tuning.

5. K-Nearest Neighbors (KNN) – Learning by Proximity

KNN is one of the simplest machine learning algorithms, yet surprisingly effective.

How it works:

To predict the outcome for a new data point, KNN looks at the “k” closest points in the dataset and assigns the majority label (classification) or average value (regression).

Example scenario:

Imagine moving to a new city and trying to guess the price of your house. You’d probably check the prices of nearby houses with a similar size and features. That’s essentially KNN in action.

Pros:

- No training required (lazy learning).

- Intuitive and easy to implement.

Cons:

- Slower on large datasets.

- Sensitive to irrelevant features.

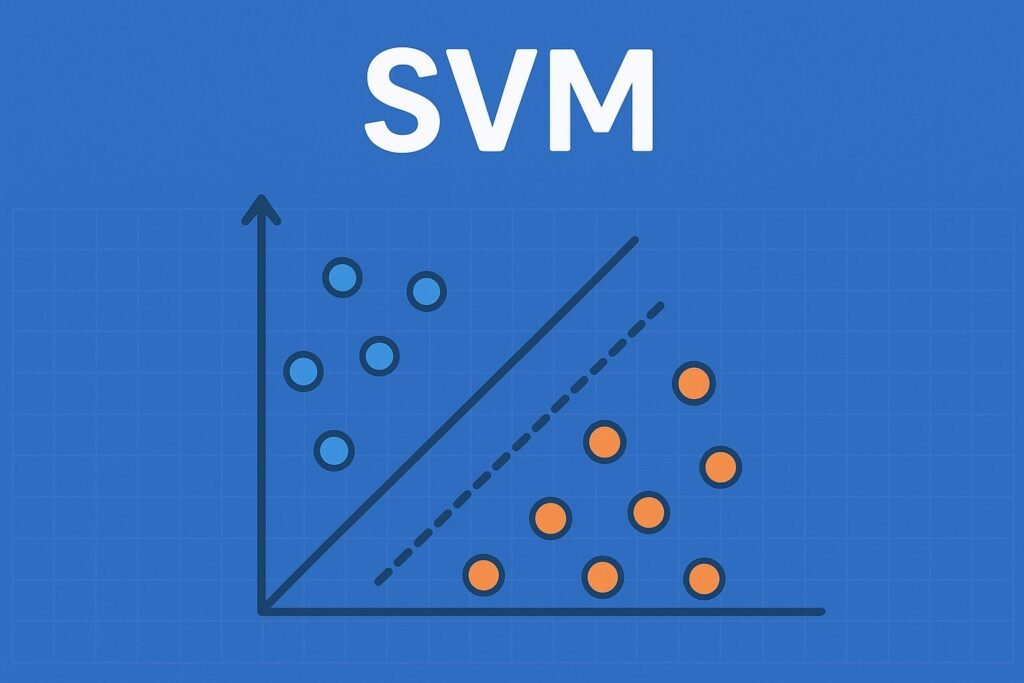

6. Support Vector Machines (SVM) – Drawing Clear Boundaries

SVM is a bit more advanced, but still essential for beginners to understand.

How it works:

It finds the “best boundary” (called a hyperplane) that separates classes in the dataset.

Example scenario:

In email filtering, SVM might draw a boundary that separates spam from non-spam emails based on word frequency, sender domain, and other features.

Why it’s powerful:

- Effective for high-dimensional data (like text or images).

- Works well when classes are clearly separated.

7. Naive Bayes – Probabilistic Classifier

Naive Bayes is based on Bayes’ theorem, which uses probabilities to classify data. You can check out Analytics Vidhya’s tutorial on Naive Bayes for practical insights.

How it works:

It assumes features are independent (a “naive” assumption) and calculates the likelihood that a data point belongs to a class.

Example scenario:

Spam filters often use Naive Bayes: if an email contains words like “win,” “free,” or “lottery,” the probability it’s spam increases.

Strengths:

- Extremely fast and efficient.

- Works well with text classification.

8. K-Means Clustering – Finding Hidden Groups

So far, we’ve covered supervised algorithms. K-means is one of the most popular unsupervised learning algorithms.

How it works:

It groups data into “k” clusters by minimizing the distance between points and their cluster center.

Example scenario:

A marketing team could use K-means to segment customers into groups: frequent buyers, occasional buyers, and one-time shoppers.

Why it’s useful:

- Helps discover hidden patterns in unlabeled data.

- A go-to method for customer segmentation, image compression, and anomaly detection.

Comparison Table of Key Algorithms

Here’s a quick summary of the algorithms we’ve covered:

| Algorithm | Type | Best For | Beginner-Friendly? |

|---|---|---|---|

| Linear Regression | Supervised | Predicting continuous values | Yes |

| Logistic Regression | Supervised | Binary classification | Yes |

| Decision Tree | Supervised | Classification & regression | Yes |

| Random Forest | Supervised | Complex problems, higher accuracy | Yes |

| K-Nearest Neighbors | Supervised | Classification/regression, small datasets | Yes |

| Support Vector Machine | Supervised | High-dimensional classification | Moderate |

| Naive Bayes | Supervised | Text/data classification | Yes |

| K-Means Clustering | Unsupervised | Grouping unlabeled data | Yes |

Practical Tips for Beginners

- Start simple – Don’t jump straight into SVM or neural networks. Practice with linear regression and decision trees first.

- Use small datasets – Kaggle offers beginner-friendly datasets that won’t overwhelm your computer or your brain.

- Focus on intuition, not just code – Before implementing an algorithm, make sure you can explain how it works in plain language.

- Experiment often – Try predicting your own electricity bill, workout progress, or even coffee consumption. Personal projects stick better than abstract exercises.

Key Takeaways

- Machine learning algorithms are like recipes: each has strengths, weaknesses, and ideal use cases.

- Start with regression, classification, and clustering basics before tackling advanced models.

- Real-world practice is key—don’t just read about algorithms; apply them to data you care about.

Conclusion

Learning machine learning doesn’t have to be intimidating. By mastering these eight machine learning algorithms, you’ll cover 80% of what beginners need to know. From predicting house prices to detecting spam, these algorithms form the backbone of modern AI applications.

The next step? Open a notebook, pick a dataset, and start experimenting. The more you practice, the more these algorithms will feel like old friends rather than abstract math.